I am trying to implement a simple Poisson factorization model:

theta ~ Gamma(a, b)

beta ~ Gamma(c, d)

x ~ Poisson(theta . beta^T)

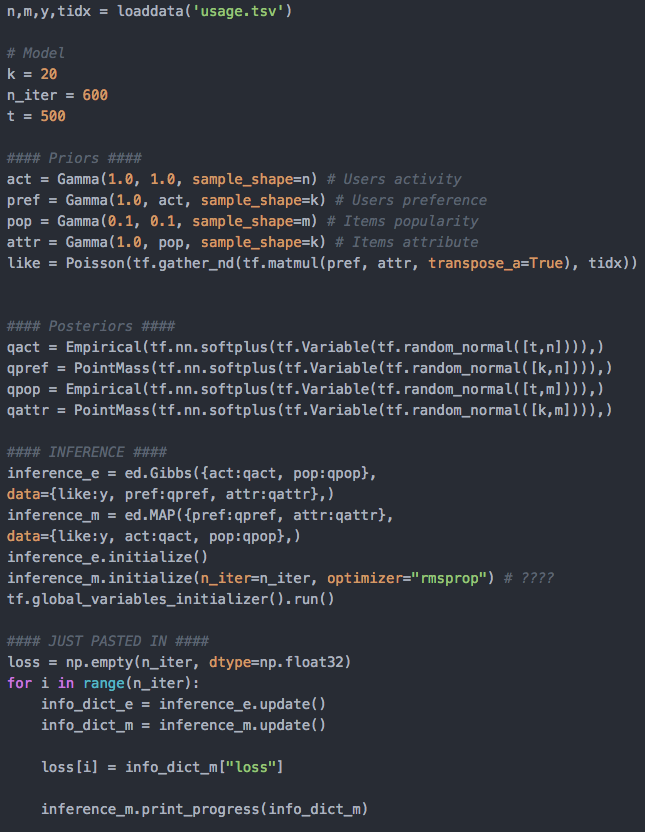
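To make the generative process concrete, here is a NumPy-only sketch (no Edward or TensorFlow) that draws one dataset from the model. It assumes the sizes from my code (300 rows, 200 columns, 10 latent factors) and all of a, b, c, d equal to 1. The function name `sample_poisson_factorization` is just for illustration. Note that NumPy's `gamma()` takes a shape and a *scale*, so the rate is inverted.

```python
import numpy as np

def sample_poisson_factorization(n_rows=300, n_cols=200, k=10,
                                 a=1.0, b=1.0, c=1.0, d=1.0, seed=0):
    """Draw (theta, beta, x) from the Poisson factorization model.

    a, c are Gamma shapes and b, d are Gamma rates. NumPy's gamma()
    is parameterized by (shape, scale), so scale = 1 / rate.
    """
    rng = np.random.RandomState(seed)
    theta = rng.gamma(shape=a, scale=1.0 / b, size=(n_rows, k))  # row factors
    beta = rng.gamma(shape=c, scale=1.0 / d, size=(n_cols, k))   # column factors
    rate = theta.dot(beta.T)  # rate[i, j] = sum_k theta[i, k] * beta[j, k]
    x = rng.poisson(rate)     # x[i, j] ~ Poisson(rate[i, j])
    return theta, beta, x

theta, beta, x = sample_poisson_factorization()
print(x.shape)   # (300, 200)
print(x.mean())  # average count per cell under the prior
```

With every shape and rate equal to 1 and 10 factors, the expected rate per cell under the prior is 10, while the toy matrix `M` in my code has a mean of about 0.1.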

My code:

```python
import numpy as np
import tensorflow as tf
import edward as ed
from edward.models import Poisson, Gamma

# Toy dataset: 300 x 200 binary matrix with roughly 10% ones
M = (np.random.random((300, 200)) > 0.9).astype(int)

# Model
theta = Gamma(1. * tf.ones([300, 10]), 1.)
beta = Gamma(1. * tf.ones([200, 10]), 1.)
X = Poisson(tf.matmul(theta, tf.transpose(beta)))

# Variational approximation: shape and rate kept positive via softplus
q_theta = Gamma(tf.nn.softplus(tf.Variable(tf.random_normal([300, 10]))),
                tf.nn.softplus(tf.Variable(tf.random_normal([300, 10]))))
q_beta = Gamma(tf.nn.softplus(tf.Variable(tf.random_normal([200, 10]))),
               tf.nn.softplus(tf.Variable(tf.random_normal([200, 10]))))

# Inference
inference = ed.KLqp({theta: q_theta, beta: q_beta}, data={X: M})
inference.initialize(n_print=30, n_iter=300)

sess = ed.get_session()
tf.global_variables_initializer().run()

for t in range(inference.n_iter):
    info_dict = inference.update()
    inference.print_progress(info_dict)
    if t % inference.n_print == 0:
        print('\tloss: %f' % info_dict['loss'])
```
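To see how spread out the initial variational distributions are, here is a NumPy-only sketch that mimics the `q_theta` initialization above (shape and rate both `softplus` of standard normals). It does not call Edward; it only assumes the same Gamma(shape, rate) parameterization. The float32 check is an assumption on my part: it counts samples that would fall below the smallest normal float32 value, but I have not verified whether TensorFlow's Gamma sampler actually underflows there.

```python
import numpy as np

def softplus(z):
    # Numerically stable log(1 + exp(z)); same function as tf.nn.softplus
    return np.logaddexp(0.0, z)

rng = np.random.RandomState(0)

# Mimic q_theta's initialization: shape and rate are softplus(N(0, 1)), size [300, 10]
q_shape = softplus(rng.randn(300, 10))
q_rate = softplus(rng.randn(300, 10))

# NumPy's gamma() takes (shape, scale), so pass scale = 1 / rate
samples = rng.gamma(q_shape, 1.0 / q_rate)

# Smallest positive normal float32 value (about 1.18e-38)
tiny32 = np.finfo(np.float32).tiny

print("shape range:  %.3g .. %.3g" % (q_shape.min(), q_shape.max()))
print("sample range: %.3g .. %.3g" % (samples.min(), samples.max()))
print("samples below float32 tiny: %d" % (samples < tiny32).sum())
```

Entries whose shape parameter is close to zero produce samples spanning many orders of magnitude, which is one thing worth ruling out before looking elsewhere.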

Why does the loss keep increasing and eventually end up as nan?

```
1/300 [ 0%] ETA: 743s | Loss: 1969714.125 loss: 1969714.125000
30/300 [ 10%] ███ ETA: 23s | Loss: 5959987.500 loss: 5910604.000000
60/300 [ 20%] ██████ ETA: 10s | Loss: 27670352.000 loss: 29153144.000000
90/300 [ 30%] █████████ ETA: 6s | Loss: 335439648.000 loss: 430829216.000000
120/300 [ 40%] ████████████ ETA: 4s | Loss: 5386785280.000 loss: 5760766464.000000
150/300 [ 50%] ███████████████ ETA: 2s | Loss: 88767381504.000 loss: 92775710720.000000
180/300 [ 60%] ██████████████████ ETA: 1s | Loss: nan loss: nan
210/300 [ 70%] █████████████████████ ETA: 1s | Loss: nan loss: nan
240/300 [ 80%] ████████████████████████ ETA: 0s | Loss: nan loss: nan
270/300 [ 90%] ███████████████████████████ ETA: 0s | Loss: nan loss: nan
300/300 [100%] ██████████████████████████████ Elapsed: 3s | Loss: nan
```
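For reference, my understanding (not verified against Edward's source) is that the `Loss` printed by `KLqp` is a Monte Carlo estimate of the negative ELBO. Writing $\theta_i$ for row $i$ of theta, $\beta_j$ for row $j$ of beta, $\lambda$ for the variational parameters, and $S$ for the number of samples per step, the log joint and the estimated loss would be:

$$
\log p(x, \theta, \beta) = \sum_{i,j} \Big[ x_{ij} \log\big(\theta_i^\top \beta_j\big) - \theta_i^\top \beta_j - \log x_{ij}! \Big] + \sum_{i,k} \log \mathrm{Gamma}(\theta_{ik} \mid a, b) + \sum_{j,k} \log \mathrm{Gamma}(\beta_{jk} \mid c, d)
$$

$$
\text{loss}(\lambda) \approx -\frac{1}{S} \sum_{s=1}^{S} \Big[ \log p\big(x, \theta^{(s)}, \beta^{(s)}\big) - \log q\big(\theta^{(s)}, \beta^{(s)}; \lambda\big) \Big], \qquad \big(\theta^{(s)}, \beta^{(s)}\big) \sim q(\cdot\,; \lambda)
$$

If Edward uses an analytic KL term for the prior part, the second and third sums are handled differently. Either way, any cell with $x_{ij} > 0$ whose sampled rate $\theta_i^\top \beta_j$ is exactly 0 makes the first sum $-\infty$.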